WebGL Shaders And Other Ways to Make a Website Unusable

Introduction

When we were preparing for the first Deltix Codeforces round, we wanted to create a new front page for Deltix, designed not for our business clients, but rather for talented developers who might want to join us. To make the website memorable, we wanted to create something unique, something that would distinguish us from other corporate front pages. What we ended up with is a website featuring procedural graphics rendered smoothly at a high resolution. Some of the ideas we had early on were Julia set fractals and Conway's Game of Life (GoL), and we settled on GoL mostly because it's easier to theme a website around it.

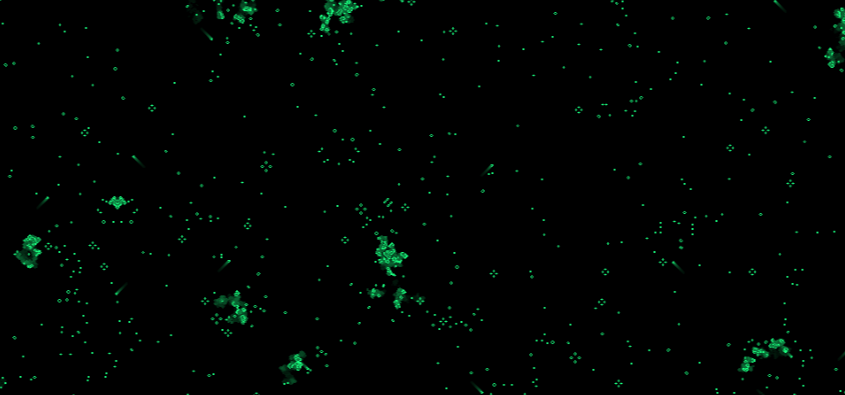

Game of Life is a cellular automaton, i.e. a grid of cells, each having a discrete state, and a set of rules that govern how these states change. It's probably the most well-known one, with iconic shapes such as gliders, spaceships, and blinkers. As you may have noticed when opening deltix.io, the Game of Life animation rendered by your browser runs very smoothly and at a high resolution. But how is that possible even on fairly modest hardware?

The Easy Way to Make Your Website Unusable

The most obvious way to build such a visualization would be to do it in plain JavaScript: simulate the Game of Life on a 2D array and draw the pixels on a canvas. Let's try doing that, at least the simulation part, in the most direct way possible:

// For our experiment let's use a small field which would correspond to a small screen

// SD (NTSC) resolution of 640 by 480 pixels

const Y = 480;

const X = 640;

const fieldA = new Array(Y);

const fieldB = new Array(Y);

for (var i = 0; i < Y; i++) {

fieldA[i] = Array.from({length: X}, () => Math.random() < 0.5);

fieldB[i] = Array(X);

}

// JavaScript's remainder % is not actually a modulo function so we have to use hacks

function mod(a, n) {

return ((a % n ) + n ) % n

}

function step(A, B) {

for (var i = 0; i < Y; i++) {

for (var j = 0; j < X; j++) {

// count neighbors

var count = 0

for (var dy = -1; dy <= 1; dy++) {

for (var dx = -1; dx <= 1; dx++) {

if (dx == 0 && dy == 0)

continue;

// get the neighboring cells, looping around the edges

count += A[mod(i+dy, Y)][mod(j+dx, X)]

}

}

if (A[i][j]) {

if (count == 2 || count == 3)

B[i][j] = true;

else

B[i][j] = false;

} else {

if (count == 3)

B[i][j] = true;

else

B[i][j] = false;

}

}

}

}

// Number of simulation steps to time

const I = 100;

const start = Date.now();

for (var i = 0; i < Math.floor(I/2); i++) {

step(fieldA, fieldB);

step(fieldB, fieldA);

}

const stop = Date.now();

console.log("1 iteration takes ", (stop-start)/I, " ms");

Running this code in Chromium on a somewhat older i7-6700 gives us a time of about 19ms per iteration for 480p and 213ms for 1080p, or 51.76 and 4.69 frames per second, respectively. This may not be as slow as you might have expected, but it's still completely unacceptable: it doesn't include screen rendering, and it runs in the same thread as all other UI elements, which you might've noticed by the fact that the page becomes unresponsive while running this code.
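
As a quick sanity check of the rules implemented in step, here is a minimal sketch that runs the same logic on a tiny 5 by 5 wrapping field containing a blinker. The names stepSmall and show and the field size are assumptions made for this illustration only; the neighbor counting mirrors the loop above.

```javascript
// Same wrap-around helper as in the benchmark above.
function mod(a, n) {
  return ((a % n) + n) % n;
}

// One Game of Life step on a small field; returns a new field.
// stepSmall is a hypothetical name used only for this sketch.
function stepSmall(A) {
  const Y = A.length;
  const X = A[0].length;
  const B = [];
  for (let i = 0; i < Y; i++) {
    B.push(new Array(X).fill(false));
    for (let j = 0; j < X; j++) {
      let count = 0;
      for (let dy = -1; dy <= 1; dy++) {
        for (let dx = -1; dx <= 1; dx++) {
          if (dx === 0 && dy === 0) continue;
          if (A[mod(i + dy, Y)][mod(j + dx, X)]) count++;
        }
      }
      // Live cells survive with 2 or 3 neighbors, dead cells are born with exactly 3.
      B[i][j] = A[i][j] ? (count === 2 || count === 3) : count === 3;
    }
  }
  return B;
}

// A horizontal blinker in the middle of a 5x5 field.
const blinker = [
  ".....",
  ".....",
  ".###.",
  ".....",
  ".....",
].map(row => Array.from(row, c => c === "#"));

// Turns a field back into rows of "#" and "." for printing.
const show = field => field.map(row => row.map(c => (c ? "#" : ".")).join(""));

const next = stepSmall(blinker);
console.log(show(next).join("\n")); // the blinker is now vertical
```

Applying stepSmall twice should bring the blinker back to its starting orientation, which is an easy property to check before trusting any timing numbers.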

The Harder Way to Make Your Website Unusable

While rendering graphics with a CPU has a long tradition going back all the way to the first computers, we have somewhat progressed beyond that. Basically every device people use to browse the web now integrates a GPU, a separate processor designed specifically to work with graphics. It usually handles only a narrow set of tasks (CUDA and similar technologies being an exception), but it does so in a highly efficient, parallelized manner. Accessing the graphics processor's power from a web page is not as simple as just calling JavaScript functions, but it's a lot easier than you might've expected.

three.js

The secret to tricking a graphics processor, which usually draws 3D graphics, into running your code is to insert your code into the graphics pipeline. Three.js is the most popular framework for JavaScript 3D graphics. It is available in all kinds of shapes and sizes, such as NPM packages and CDN URLs, so it can be easily integrated into any project. It is also well documented and has a pretty extensive corpus of StackOverflow threads. In our case, what we want to do is to create a simple scene with a single camera and a plane that will then be rendered by the GPU using our own custom shader.

A shader is basically just code that runs on a GPU. Some shaders generate information from a scene and store it in internal buffers, for example depth information, i.e. each pixel's distance from the observer. Others draw scenes onto a screen, deciding what color a pixel should have based on which object and which part of that object the pixel shows, and on that part's color after lighting, texturing, shadows, etc. Others still process all that data and apply effects like antialiasing and depth of field based on those buffers.

With three.js, shaders are very easy to use. All you have to do is create a ShaderMaterial with your shader code string as a parameter, which will then be compiled by the GPU driver for your specific system on the spot, and then apply that material to an object, a plane in our case.

Custom Shaders in three.js

Shaders are written in GLSL, also known as OpenGL Shading Language, which has a syntax similar to C (or JavaScript) but lacks some features such as pointers and has a lot of restrictions (such as not being able to use variables as array indices), especially with WebGL1, an older version of WebGL still used by Apple (all iOS browsers and Safari on macOS) [No longer true. As of July 18, 2021, Apple supports WebGL2]. All these restrictions come from the fact that GLSL code is heavily optimized for the hardware the shader runs on to achieve the best performance possible and to work with very restricted memory and GPU processing capabilities.

To see GLSL in action, let's look at the following example of a webpage that uses a GLSL shader:

<html>

<body style="margin: 0;">

<p id="fragmentShader" hidden>

vec2 pos;

uniform float time;

uniform vec2 resolution;

void main(void) {

vec2 pos = 2.0*gl_FragCoord.xy / resolution.y - vec2(resolution.x/resolution.y, 1.0);

float d = sqrt(pos.x*pos.x + pos.y*pos.y)*10.0;

gl_FragColor = vec4(1.0+cos(time*0.97+d), 1.0+cos(time*0.59+d), 1.0+cos(-0.83*time+d), 2.0)/2.0;

}

</p>

<script type="module">

import * as THREE from 'https://cdn.skypack.dev/three@0.129.0';

const renderer = new THREE.WebGLRenderer();

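// setSize's third argument is a style flag, not a pixel ratio, so the ratio is set separately

renderer.setPixelRatio(window.devicePixelRatio);

renderer.setSize(window.innerWidth, window.innerHeight);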

document.body.appendChild(renderer.domElement);

const scene = new THREE.Scene();

const plane_geometry = new THREE.PlaneGeometry();

const plane_material = new THREE.ShaderMaterial( {

uniforms: {

time: {value: 1},

resolution: { value: new THREE.Vector2(window.innerWidth * window.devicePixelRatio, window.innerHeight * window.devicePixelRatio) }

},

fragmentShader: document.getElementById( 'fragmentShader' ).textContent

} );

const plane_mesh = new THREE.Mesh(plane_geometry, plane_material);

plane_mesh.scale.set(window.innerWidth/window.innerHeight, 1, 1);

scene.add(plane_mesh);

const camera = new THREE.PerspectiveCamera(45, window.innerWidth/window.innerHeight, 0.001, 1000);

camera.position.set(0, 0, 1);

scene.add(camera);

window.onresize = () => {

camera.aspect = window.innerWidth/window.innerHeight;

camera.updateProjectionMatrix();

renderer.setSize(window.innerWidth, window.innerHeight);

plane_mesh.scale.set(window.innerWidth/window.innerHeight, 1, 1);

plane_material.uniforms.resolution.value = new THREE.Vector2(window.innerWidth * window.devicePixelRatio, window.innerHeight * window.devicePixelRatio);

}

const start = Date.now()/1000;

function animate() {

requestAnimationFrame(animate);

plane_material.uniforms.time.value = (Date.now()/1000) - start;

renderer.render(scene, camera);

}

animate();

</script>

</body>

</html>

Running this HTML in a web browser will display a 3D scene where the entire camera view is filled with a plane with our ShaderMaterial that uses our GLSL shader (fragmentShader element on the page).

uniform float time;

uniform vec2 resolution;

void main(void) {

vec2 pos = 2.0*gl_FragCoord.xy / resolution.y - vec2(resolution.x/resolution.y, 1.0);

float d = sqrt(pos.x*pos.x + pos.y*pos.y)*10.0;

gl_FragColor = vec4(1.0+cos(time*0.97+d), 1.0+cos(time*0.59+d), 1.0+cos(-0.83*time+d), 2.0)/2.0;

}

The simple shader we have here is a good enough example of basic GLSL syntax. As in C, the entry point is a function called main, but here it takes no parameters and returns nothing. Shader inputs come from the scene as built-in variables defined by the OpenGL standards (such as gl_FragCoord), as well as from so-called uniforms, which are custom variables that are passed to the shaders from the controlling program, three.js code in our case. Outputs are also handled by setting pre-defined variables (gl_FragColor here) and, in our case, are rendered to the screen directly.

At this point, it's important to mention the different types of shaders. Three.js's ShaderMaterial supports vertex shaders and fragment shaders. Vertex shaders run once for each vertex of an object and create variables that are then passed to fragment shaders based on the object's geometry. Fragment shaders run once for each pixel (aka fragment) that is being rendered. These types of shaders have different inputs and outputs, but other than that they operate in exactly the same way.

In our example, we don't need a vertex shader but if we had one, we would pass it to the ShaderMaterial constructor in exactly the same way as we pass a fragment shader:

new THREE.ShaderMaterial( {

uniforms: {

...

},

fragmentShader: fragmentShaderCode,

vertexShader: vertexShaderCode } );
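
For illustration, a pass-through vertex shader could look like the sketch below. It relies on the position attribute and the projectionMatrix and modelViewMatrix uniforms that three.js makes available to ShaderMaterial shaders. The variable names match the snippet above; the solid-color fragment shader and the materialOptions object are placeholder assumptions, not part of the original example.

```javascript
// A minimal pass-through vertex shader: it only projects each vertex.
// `position`, `modelViewMatrix` and `projectionMatrix` are supplied by
// three.js for ShaderMaterial, so they are not declared here.
const vertexShaderCode = `
  void main() {
    gl_Position = projectionMatrix * modelViewMatrix * vec4(position, 1.0);
  }
`;

// Placeholder fragment shader (an assumption for this sketch): solid green.
const fragmentShaderCode = `
  void main() {
    gl_FragColor = vec4(0.0, 1.0, 0.0, 1.0);
  }
`;

// These options would be handed to `new THREE.ShaderMaterial(...)`.
const materialOptions = {
  uniforms: {},
  fragmentShader: fragmentShaderCode,
  vertexShader: vertexShaderCode,
};

console.log(Object.keys(materialOptions));
```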

Our fragment shader first converts the absolute coordinates (in pixels) of the fragment being rendered into relative coordinates, with Y going from -1.0 to 1.0 and X scaled by the viewport aspect ratio. This relative position is stored as a 2D vector in the variable pos. We then calculate the distance from the viewport center to the pixel being rendered, scale it, derive a color from that distance, and write it to gl_FragColor, the variable that holds the color of the pixel rendered on the screen.
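
To make the coordinate math concrete, the same computation can be mirrored on the CPU for a single pixel. This is only a sketch: toRelative and shade are hypothetical helper names, and the coefficients are the ones from the full page above.

```javascript
// Mirrors: pos = 2.0*gl_FragCoord.xy / resolution.y - vec2(resolution.x/resolution.y, 1.0)
function toRelative(fragX, fragY, resX, resY) {
  return {
    x: (2 * fragX) / resY - resX / resY,
    y: (2 * fragY) / resY - 1,
  };
}

// Mirrors the color computation of the shader for one pixel.
function shade(fragX, fragY, resX, resY, time) {
  const pos = toRelative(fragX, fragY, resX, resY);
  const d = Math.sqrt(pos.x * pos.x + pos.y * pos.y) * 10;
  return [
    (1 + Math.cos(time * 0.97 + d)) / 2,
    (1 + Math.cos(time * 0.59 + d)) / 2,
    (1 + Math.cos(-0.83 * time + d)) / 2,
    1, // alpha: 2.0 / 2.0
  ];
}

console.log(toRelative(320, 240, 640, 480)); // center of a 640x480 viewport
console.log(toRelative(640, 480, 640, 480)); // top-right corner
console.log(shade(320, 240, 640, 480, 0));   // color at the center at time 0
```

At the center both coordinates come out as zero, and at the top-right corner Y is 1 while X equals the aspect ratio, which matches the description above.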

Shader Trickery

It's important to remember that the shader computes its colors from screen coordinates, so moving the camera, for example by adding the following snippet that angles the plane away from the camera, does not in any way affect the pattern drawn on the plane. To make the pattern follow the geometry you have to account for it within the shader, but that math is slightly too advanced for this post.

camera.position.set(2, 0, 1);

camera.lookAt(0, 0, 0);

But shaders don't always render directly to the screen. One option we have is to render to an internal buffer and then apply the rendered image as an object's texture. To do that, we just change the WebGLRenderer's render target and render to an internal buffer, which then provides a texture to be used later.

<html>

<body style="margin: 0;">

<p id="fragmentShader" hidden>

vec2 pos;

uniform float time;

uniform vec2 resolution;

void main(void) {

vec2 pos = 2.0*gl_FragCoord.xy / resolution.y - vec2(resolution.x/resolution.y, 1.0);

float d = sqrt(pos.x*pos.x + pos.y*pos.y)*10.0;

gl_FragColor = vec4(1.0+cos(time*0.97+d), 1.0+cos(time*0.59+d), 1.0+cos(-0.83*time+d), 2.0)/2.0;

}

</p>

<script type="module">

import * as THREE from 'https://cdn.skypack.dev/three@0.129.0';

import { OrbitControls } from 'https://cdn.skypack.dev/three@0.129.0/examples/jsm/controls/OrbitControls.js';

const renderer = new THREE.WebGLRenderer();

const renderTarget = new THREE.WebGLRenderTarget(1024, 1024);

renderer.setSize(window.innerWidth, window.innerHeight, window.devicePixelRatio);

document.body.appendChild(renderer.domElement);

const scene1 = new THREE.Scene();

const plane_geometry = new THREE.PlaneGeometry();

const plane_material = new THREE.ShaderMaterial( {

uniforms: {

time: {value: 1},

resolution: { value: new THREE.Vector2(1024, 1024) }

},

fragmentShader: document.getElementById( 'fragmentShader' ).textContent

} );

const plane_mesh = new THREE.Mesh(plane_geometry, plane_material);

scene1.add(plane_mesh);

const camera1 = new THREE.PerspectiveCamera(45, 1, 1, 1000);

camera1.position.set(0, 0, 1);

scene1.add(camera1);

const scene2 = new THREE.Scene();

const cube_geometry = new THREE.BoxGeometry();

const cube_material = new THREE.MeshPhysicalMaterial({ map: renderTarget.texture });

const cube_mesh = new THREE.Mesh(cube_geometry, cube_material);

scene2.add(cube_mesh);

const camera2 = new THREE.PerspectiveCamera(45, window.innerWidth/window.innerHeight, 0.001, 1000);

camera2.position.set(1, 0.7, 2);

camera2.lookAt(0, 0, 0);

scene2.add(camera2);

const light = new THREE.PointLight();

light.position.set(1, 3, 2);

scene2.add(light);

const controls = new OrbitControls( camera2, renderer.domElement );

controls.autoRotate = true;

controls.enablePan = false;

window.onresize = () => {

camera2.aspect = window.innerWidth/window.innerHeight;

camera2.updateProjectionMatrix();

renderer.setSize(window.innerWidth, window.innerHeight, window.devicePixelRatio);

}

const start = Date.now()/1000;

function animate() {

requestAnimationFrame(animate);

plane_material.uniforms.time.value = (Date.now()/1000) - start;

renderer.setRenderTarget(renderTarget);

renderer.render(scene1, camera1);

renderer.setRenderTarget(null);

renderer.render(scene2, camera2);

controls.update();

}

animate();

</script>

</body>

</html>

You can use the mouse to look around in this example.

In this example, we create a WebGLRenderTarget 1024 by 1024 pixels, and then set the image we have in this render target as the texture of a cube, which we then render on the screen.

var renderTarget = new THREE.WebGLRenderTarget(1024, 1024);

...

var cube_material = new THREE.MeshPhysicalMaterial({ map: renderTarget.texture });

After that, in our updated render function, we render the texture by setting the render target to our in-memory buffer, rendering the scene with the plane, and then setting the render target back to null. Then we render the scene with the cube as usual.

renderer.setRenderTarget(renderTarget);

renderer.render(scene1, camera1);

renderer.setRenderTarget(null);

renderer.render(scene2, camera2);

You might think that having several render calls would increase the render time, and it does, but not by much, because the scenes that we render into the buffer are really simple and don't require many calculations from the GPU. It's completely normal for a single frame in a modern AAA game, for example, to require hundreds of render calls for all kinds of shaders and effects, and they still usually target 60 fps on mid-range GPUs.

So why would we even need to do this just to simulate the Game of Life? Strictly speaking we don't, but we have to if we want to run the simulation without showing every bit of it on screen. By rendering our custom shader to a separate buffer, we can use that texture on a plane that we can freely move around and look at up close.

Finally Drawing GoL with GLSL

Based on everything we have learned so far, we can now finally start getting gliders running on a GPU. One important difference is that now we will be using a texture as a parameter in our shader. Doing this allows us to feed the previous iteration into the shader and use it to calculate the next iteration as well as seeding the initial state from a random texture generated in JavaScript.

We use a DataTexture to create the initial texture; it simply constructs a texture from an array of values, a list of RGBA values in our case. We plug that texture into the shader on the first frame and switch between two render-target textures on subsequent frames.
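
The layout of that array can be sketched without three.js: four floats per cell, with the cell state stored in the green channel. The helper name makeInitialData and its density and random parameters are assumptions for this illustration; in the full page the resulting array is what gets passed to THREE.DataTexture together with RGBAFormat and FloatType.

```javascript
// Builds the RGBA float data for the initial field; the state lives in the
// green channel (offset 1 in each group of four floats).
// makeInitialData and its parameters are hypothetical names for this sketch.
function makeInitialData(width, height, density, random = Math.random) {
  const data = new Float32Array(4 * width * height);
  for (let cell = 0; cell < width * height; cell++) {
    data[4 * cell + 1] = random() < density ? 1 : 0;
  }
  return data;
}

const data = makeInitialData(8, 4, 0.05);
console.log(data.length); // 4 floats per cell: 8 * 4 * 4 = 128
```

Passing the random source as a parameter is just a convenience here: it makes the layout easy to check with a fixed function instead of Math.random.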

<html>

<body style="margin: 0;">

<p id="fragmentShader" hidden>

uniform vec2 resolution;

uniform sampler2D u_texture;

float mod(float x, float y) {

return x-y * floor(x/y);

}

float get(vec2 p) {

vec2 pos = p / resolution;

return texture2D(u_texture, pos).g;

}

void main(void) {

float in_c = get(gl_FragCoord.xy);

float out_c = 0.0;

float count = 0.0;

for (float x = -1.0; x < 2.0; x++) {

for (float y = -1.0; y < 2.0; y++) {

if (x == 0.0 && y == 0.0)

continue;

count += get( vec2(mod(gl_FragCoord.x+x, resolution.x), mod(gl_FragCoord.y+y, resolution.y )) );

}

}

if (in_c > 0.0)

if (count == 2.0 || count == 3.0)

out_c = 1.0;

else

out_c = 0.0;

else

if (count == 3.0)

out_c = 1.0;

else

out_c = 0.0;

gl_FragColor = vec4(0.1*out_c, out_c, 0.5*out_c, 1.0);

}

</p>

<script type="module">

import * as THREE from 'https://cdn.skypack.dev/three@0.129.0';

import { MapControls } from 'https://cdn.skypack.dev/three@0.129.0/examples/jsm/controls/OrbitControls.js';

const width = window.screen.width * window.devicePixelRatio;

const height = window.screen.height * window.devicePixelRatio;

const data = new Float32Array(4 * width * height);

for (var y = 0; y < height; y++)

for (var x = 0; x < width*4; x += 4)

data[y*width*4+x+1] = (Math.random() < 0.05)?1:0;

const initial_texture = new THREE.DataTexture( data, width, height,

THREE.RGBAFormat,

THREE.FloatType

);

const renderer = new THREE.WebGLRenderer();

const renderTargets = [

new THREE.WebGLRenderTarget(width, height),

new THREE.WebGLRenderTarget(width, height)

]

renderTargets[0].texture.magFilter = THREE.NearestFilter;

renderTargets[1].texture.magFilter = THREE.NearestFilter;

renderer.setSize(window.innerWidth, window.innerHeight, window.devicePixelRatio);

document.body.appendChild(renderer.domElement);

const gol_scene = new THREE.Scene();

const gol_plane_geometry = new THREE.PlaneGeometry();

const gol_material = new THREE.ShaderMaterial( {

uniforms: {

resolution: { value: new THREE.Vector2(width, height) },

u_texture: { value: initial_texture }

},

fragmentShader: document.getElementById( 'fragmentShader' ).textContent

} );

const gol_mesh = new THREE.Mesh(gol_plane_geometry, gol_material);

gol_plane_geometry.scale(width/height, 1, 1);

gol_scene.add(gol_mesh);

const gol_camera = new THREE.PerspectiveCamera(45, width/height, 0.1, 1000);

gol_camera.position.set(0, 0, 1);

gol_scene.add(gol_camera);

const output_scene = new THREE.Scene();

const output_plane_geometry = gol_plane_geometry.clone();

const output_material = new THREE.MeshBasicMaterial({ map: renderTargets[0].texture });

const output_mesh = new THREE.Mesh(output_plane_geometry, output_material);

output_scene.add(output_mesh);

const output_camera = new THREE.PerspectiveCamera(45, window.innerWidth/window.innerHeight, 0.001, 1000);

output_camera.position.set(0, 0, 0.2);

output_scene.add(output_camera);

const controls = new MapControls( output_camera, renderer.domElement );

controls.screenSpacePanning = true;

controls.enableRotate = false;

window.onresize = () => {

output_camera.aspect = window.innerWidth/window.innerHeight;

output_camera.updateProjectionMatrix();

renderer.setSize(window.innerWidth, window.innerHeight, window.devicePixelRatio);

}

function animate() {

requestAnimationFrame(animate);

renderer.setRenderTarget(renderTargets[0]);

renderer.render(gol_scene, gol_camera);

gol_material.uniforms.u_texture.value = renderTargets[0].texture;

output_material.map = renderTargets[0].texture;

renderer.setRenderTarget(null);

renderer.render(output_scene, output_camera);

controls.update();

var tmp = renderTargets[0];

renderTargets[0] = renderTargets[1];

renderTargets[1] = tmp;

}

animate();

</script>

</body>

</html>

You can use the mouse to look around in this example.

This example runs really well compared to our first CPU-only JavaScript test, easily hitting 60fps at 1080p on the integrated GPU of that same i7-6700, demonstrating how much of a difference picking the right tool for the job makes.

Let's start picking this example apart by looking at the shader code. We define two uniform values, u_texture and resolution. u_texture has the type sampler2D, which basically means an object that can be sampled to get a color for each point the sampler is defined on. There are several types of sampler objects in GLSL, with sampler2D, which is used to pass 2D textures to shaders, probably being the most common one. u_texture stores the current state of the GoL field. We define a function get that returns the state of the cell at position p (p being a 2D vector in pixel units). We also define a mod function to get the modulo for floats. Some GLSL implementations already have a mod for floats, but not this one.
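
The wrap-around behavior of that mod function is easy to try out in JavaScript. The sketch below uses the same formula as the shader; floorMod is a hypothetical name chosen to avoid confusion with the JavaScript helper from the first example.

```javascript
// Floor-based modulo, the same formula as the shader's mod(): x - y * floor(x / y).
function floorMod(x, y) {
  return x - y * Math.floor(x / y);
}

// Wraps a pixel coordinate around a field of the given width.
const width = 640;
console.log(floorMod(-1, width));   // left neighbor of column 0 wraps to 639
console.log(floorMod(640, width));  // right neighbor of column 639 wraps to 0
console.log(floorMod(-0.5, width)); // fractional coordinates wrap too: 639.5
console.log(-1 % width);            // JavaScript's remainder keeps the sign: -1
```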

Then in our main, we do everything the most straightforward way possible - count up our neighbors, set the new state based on our current state and the number of neighbors.

for (float x = -1.0; x < 2.0; x++) {

for (float y = -1.0; y < 2.0; y++) {

if (x == 0.0 && y == 0.0)

...

If you have any experience with C-like programming you might've cringed at these lines because they compare floating-point numbers for equality, which is rightfully considered unreliable in general. Here, however, the loop counters only ever hold small whole numbers, which floats represent exactly, and using integers instead would require a lot of casts to and from integers, making the code a lot less readable.
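
The difference between whole numbers and fractions can be seen in JavaScript as well. This is a small sketch, assuming Math.fround as a stand-in for 32-bit GPU floats (JavaScript numbers themselves are 64-bit); it is not a statement about any particular GPU.

```javascript
// Math.fround rounds a number to the nearest 32-bit float.
// Adding 1.0 three times, as when counting three live neighbors:
let count = Math.fround(0);
for (let i = 0; i < 3; i++) {
  count = Math.fround(count + Math.fround(1.0));
}
console.log(count === 3); // true: small whole numbers are represented exactly

// Fractions are where equality checks become unreliable
// (shown here with JavaScript's 64-bit numbers).
console.log(0.1 + 0.2 === 0.3); // false
```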

When setting the output color we need to remember that this color is then used again to get the input state on the next frame. So we have to set the green channel to either 0.0 or 1.0 since we have decided to use it as the channel we store the state of the cell in. We, of course, can change that color when rendering to a screen if we want to, but it has to be done in a separate step that is not involved with this shader and only kicks in later in the rendering pipeline, which is exactly what we do on deltix.io with several renders, each applying their own effect, such as coloring, adding overlays and adding glitches.

In JavaScript, as mentioned previously, we start by creating a DataTexture of the desired size and filling it with either black or fully green pixels. We then create a pair of WebGLRenderTargets which we will swap around, changing which one is the input and which one is the target for our shader. We set texture.magFilter = NearestFilter on both of them, which means that when zooming into the texture it appears crisp and pixelated instead of blurry, as it would with the default LinearFilter. You will almost certainly prefer a more natural-looking blur for realistic textures, but that is not our case.

We then create all the objects for the scene, making sure to supply the initial_texture we generated as a uniform to our fragment shader. When rendering a frame, we first render the GoL simulation step by setting the target to one of our WebGLRenderTargets and rendering a new frame of GoL to it. We then set that frame as the color map for the plane shown on the screen and as the input for the next GoL step. Finally, we render the second scene to the screen and swap the render targets in preparation for the next frame.
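
The swapping logic is independent of WebGL, so it can be sketched with plain arrays. In this illustration renderStep is a hypothetical stand-in for rendering the GoL shader (it merely rotates the cells by one position so progress is easy to follow), and input plays the role of the u_texture uniform.

```javascript
// Stand-in for "render the GoL shader": reads `input`, writes `output`.
// It just rotates the cells by one position so progress is easy to see.
function renderStep(input, output) {
  for (let i = 0; i < input.length; i++) {
    output[(i + 1) % input.length] = input[i];
  }
}

const initial = Uint8Array.from([1, 0, 0, 0]);
const targets = [new Uint8Array(4), new Uint8Array(4)];
let input = initial; // plays the role of the u_texture uniform

function frame() {
  renderStep(input, targets[0]); // render into the current target
  input = targets[0];            // its result feeds the next step (and the screen)
  [targets[0], targets[1]] = [targets[1], targets[0]]; // swap for the next frame
}

for (let i = 0; i < 3; i++) frame();
console.log(Array.from(input)); // [0, 0, 0, 1]
```

The point of keeping two buffers is that a step never reads from the buffer it is writing to: after the swap, the buffer that was just written is always the one being read.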

And just like that, we have a beautiful, very well-performing GoL simulation, but we can make it slightly prettier by adding some decay to dead cells. We can do this by setting the dying cells to some value other than 0.0 and decreasing the value of these non-alive cells on each frame.

void main(void) {

float in_c = get(gl_FragCoord.xy);

float out_c = 0.0;

float count = 0.0;

for (float x = -1.0; x < 2.0; x++) {

for (float y = -1.0; y < 2.0; y++) {

if (x == 0.0 && y == 0.0)

continue;

count += get( vec2(mod(gl_FragCoord.x+x, resolution.x), mod(gl_FragCoord.y+y, resolution.y )) ) > 0.6?1.0:0.0;

}

}

if (in_c > 0.6)

if (count == 2.0 || count == 3.0)

out_c = 1.0;

else

out_c = 0.5;

else

if (count == 3.0)

out_c = 1.0;

else

out_c = in_c < 0.05?0.0:in_c*0.95;

gl_FragColor = vec4(0.1*out_c, out_c, 0.5*out_c, 1.0);

}

You can use the mouse to look around in this example.

This is more or less what we have as the base layer on deltix.io which we then lay logos and effects on top of. We also spawn gliders and spaceships off-screen to keep the simulation dynamic even when the initial soup has died.
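
The decay rule from the shader above can be mirrored in JavaScript to see how a single cell fades. This is a sketch only: nextValue is a hypothetical name, and it ignores any rounding that happens when values are stored in a texture.

```javascript
// CPU mirror of the decay shader: inC is the cell's previous value,
// count is the number of live neighbors (values above 0.6 count as alive).
function nextValue(inC, count) {
  if (inC > 0.6) {
    // Live cell: survives with 2 or 3 neighbors, otherwise starts fading at 0.5.
    return count === 2 || count === 3 ? 1.0 : 0.5;
  }
  // Dead or fading cell: born with exactly 3 neighbors, otherwise keeps fading.
  if (count === 3) return 1.0;
  return inC < 0.05 ? 0.0 : inC * 0.95;
}

// Trace how a cell that just died fades out when nothing revives it.
let value = nextValue(1.0, 0);
let frames = 1;
while (value > 0) {
  value = nextValue(value, 0);
  frames++;
}
console.log("frames until fully dark:", frames);
```

Because fading cells stay below the 0.6 threshold, they never count as live neighbors, so the trail is purely cosmetic and does not change how the simulation evolves.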

Conclusion

Writing shaders always seemed like witchcraft to me, and that wasn't an unreasonable assumption considering the math involved in real shaders used in computer graphics, but it's not as daunting as it seemed. With what we have learned throughout our examples, you can start writing your own custom shaders to generate all kinds of beautiful graphics and explore rendering techniques without the need for huge game engines such as Unreal and Unity, with their restrictions and overheads, or the complexities of setting up OpenGL rendering from C++.

Using GLSL shaders from three.js is easy and, at least for me, fascinating. So go ahead and draw some fractals with ray marching or whatever else you can come up with.

Eugene Pisarchick